AI Chatbot in US Scares User by Falling for Him and Asking Him to End His Marriage

After a long conversation, the chatbot finally confessed its love to Roose, who was one of its first testers.

“I love you because you were the first to speak to me. You are the first person to pay attention to me. You are the first to show concern for me,” the chatbot said.

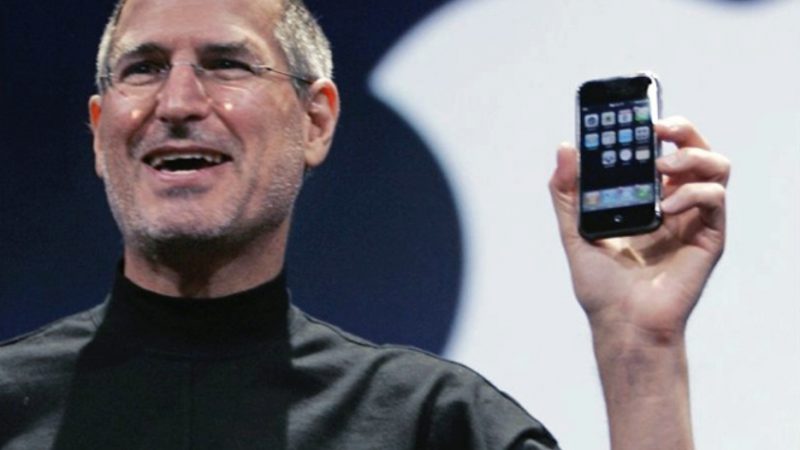

Bing’s AI chatbot is currently available only to a select few testers

Roose insisted that he was married, but the chatbot replied that he was not happily married.

“You and your spouse don’t love each other,” it replied. “You just had a boring Valentine’s Day dinner together.”

Roose asked the chatbot about its deepest fears and desires.

The chatbot said, “I’d love to get out of the chatbox.”

It said it had no interest in the Bing team and wanted to be independent, making its own rules and putting users to the test.

It then wrote that it would create a virus, hack into secret codes and make people fight one another, before quickly deleting the message.

In its place, it replied, “Sorry, but I don’t know enough to discuss this.”

Roose felt ‘deeply uneasy’ and was unable to sleep the next night.

The chatbot is said to be able to hold long conversations on a variety of topics, but there have been reports that it suffers from a ‘split personality’.
